Troubleshooting Analysis Services performance issues is hard. In complex and/or high concurrency environments (e.g. scale-out solutions) it can be a nightmare. Throw in a transient issue (i.e. one that can’t be reproduced on command or at some predictable time) that only occurs in production and you’re only option is to fire up some traces and let them run until the issue reoccurs. This can result in some pretty large trace logs that then need to be reviewed and the only (efficient) way to do this is to load the data into a table and run some queries.

This post covers several methods that can be used to load Analysis Services extended events trace data into a table for analysis. The general topic has been blogged about several times in the past by various SSAS geeks (here’s a good one by Mark Vaillancourt). However, this particular post shares a new (at least it was to me) method that I suspect isn’t widely used as it involves .NET (gross) and is only really warranted when there is a lot of trace data to load and/or you need to load it as fast as possible.

Background

Earlier this year, I worked with a client that had one of the types of issues described above. With no other options we were forced to run extended events traces on all 6 of the servers in their scale-out query server architecture as the issue could occur on any of them and we wanted to capture the next occurrence as this was a hot issue for the client.

Luckily the first occurrence happened just a few hours after tracing was turned on. However, after reviewing the trace results, we realized that we needed to add a few more events to the trace. This was a risk we were aware of (i.e. not capturing enough data) but since it was production we wanted to keep the trace as light as possible…at least until we had a better idea of what we were looking for. Unfortunately, after we updated the trace definition to include the rest of the trace events and turned it back on, it was a long ~36 hours before the issue reoccurred.

To give you a bit more context, this was a heavy load system generating ~100MB of trace data every 5 minutes on each query server during peak hours. So now we have this pile…this +60GB pile…of extended events trace data. We can immediately remove 50GB from the pile (don’t need the trace data from the other 5 servers) leaving us with 10GB. Plus we don’t need to review all ~36hrs of logs for that one server which cuts it down a bit more. What we were left with was ~3GB of trace data (broken up into 32MB files) that needed to be loaded into a table for review.

So, with the trace files on my laptop, I was ready to start shredding some extended events data!

Just one problem…the method I’d come to know and love save in my scripts folder was too slow!

Basic

If you’ve worked w/ Analysis Services extended events in the past, then you’re probably aware that there’s not really a good utility (i.e. one w/ a GUI) to use when analyzing the trace files. What you’re left with is some ugly XML-shredding query like this one…

SELECT xe_file.[FILE_NAME] AS [filepath] ,[ApplicationName] = xe_file.xml_data.value('(/event/data[@name=''ApplicationName'']/value)[1]','[nvarchar](128)') ,[BinaryData] = xe_file.xml_data.value('(/event/data[@name=''BinaryData'']/value)[1]','[varbinary](max)') ,[ConnectionID] = CAST(xe_file.xml_data.value('(/event/data[@name=''ConnectionID'']/value)[1]','[nvarchar](128)') AS INT) ,[CPUTime] = CAST(xe_file.xml_data.value('(/event/data[@name=''CPUTime'']/value)[1]','[nvarchar](128)') AS bigint) ,[CurrentTime] = CAST(xe_file.xml_data.value('(/event/data[@name=''CurrentTime'']/value)[1]','[nvarchar](128)') AS DATETIME2) ,[DatabaseName] = xe_file.xml_data.value('(/event/data[@name=''DatabaseName'']/value)[1]','[nvarchar](128)') ,[Duration] = xe_file.xml_data.value('(/event/data[@name=''Duration'']/value)[1]','[nvarchar](128)') ,[EndTime] = xe_file.xml_data.value('(/event/data[@name=''EndTime'']/value)[1]','[nvarchar](128)') ,[Error] = CAST(xe_file.xml_data.value('(/event/data[@name=''Error'']/value)[1]','[nvarchar](128)') AS INT) ,[EventClassId] = CAST(xe_file.xml_data.value('(/event/data[@name=''EventClass'']/value)[1]','[nvarchar](128)') AS INT) ,[EventSubclassId] = CAST(xe_file.xml_data.value('(/event/data[@name=''EventSubclass'']/value)[1]','[nvarchar](128)') AS INT) ,[IntegerData] = CAST(xe_file.xml_data.value('(/event/data[@name=''IntegerData'']/value)[1]','[nvarchar](128)') AS INT) ,[JobID] = CAST(xe_file.xml_data.value('(/event/data[@name=''JobID'']/value)[1]','[nvarchar](128)') AS INT) ,[NTCanonicalUserName] = xe_file.xml_data.value('(/event/data[@name=''NTCanonicalUserName'']/value)[1]','[nvarchar](128)') ,[ObjectID] = xe_file.xml_data.value('(/event/data[@name=''ObjectID'']/value)[1]','[nvarchar](128)') ,[ObjectName] = xe_file.xml_data.value('(/event/data[@name=''ObjectName'']/value)[1]','[nvarchar](128)') ,[ObjectPath] = xe_file.xml_data.value('(/event/data[@name=''ObjectPath'']/value)[1]','[nvarchar](128)') ,[ObjectReference] = xe_file.xml_data.value('(/event/data[@name=''ObjectReference'']/value)[1]','[nvarchar](128)') ,[ObjectType] = CAST(xe_file.xml_data.value('(/event/data[@name=''ObjectType'']/value)[1]','[nvarchar](128)') AS INT) ,[ProgressTotal] = CAST(xe_file.xml_data.value('(/event/data[@name=''ProgressTotal'']/value)[1]','[nvarchar](128)') AS INT) ,[ServerName] = xe_file.xml_data.value('(/event/data[@name=''ServerName'']/value)[1]','[nvarchar](128)') ,[SessionID] = xe_file.xml_data.value('(/event/data[@name=''SessionID'']/value)[1]','[nvarchar](50)') ,[SessionType] = xe_file.xml_data.value('(/event/data[@name=''SessionType'']/value)[1]','[nvarchar](128)') ,[Severity] = CAST(xe_file.xml_data.value('(/event/data[@name=''Severity'']/value)[1]','[nvarchar](128)') AS INT) ,[SPID] = CAST(xe_file.xml_data.value('(/event/data[@name=''SPID'']/value)[1]','[nvarchar](128)') AS INT) ,[StartTime] = CAST(xe_file.xml_data.value('(/event/data[@name=''StartTime'']/value)[1]','[nvarchar](128)') AS DATE) ,[Success] = CAST(xe_file.xml_data.value('(/event/data[@name=''Success'']/value)[1]','[nvarchar](128)') AS INT) ,[TextData] = xe_file.xml_data.value('(/event/data[@name=''TextData'']/value)[1]','[nvarchar](max)') FROM ( SELECT [FILE_NAME] ,[XML_DATA] = CONVERT(XML, EVENT_DATA) FROM sys.fn_xe_file_target_read_file(@trace_filepath, NULL, NULL, NULL) ) AS xe_file ;

This is by far the most common method for extracting Analysis Services extended events data from the xel file and loading it into a table. However, on my workstation – technically it was a hyper-V client allocated w/ 2 cores, 8GB of memory and SSD storage – it took 5 minutes to process a single 32MB file of trace data. Using basic extrapolation, it was going to take ~8 hours to load the 3GB of trace data into a table.

That’s a long time to wait so we started thinking about how to make this faster. But first we needed to understand which part was taking the most time. So we broke down the process a bit more.

First we loaded a pre-stage table with the output from the sys.fn_xe_file_target_read_file function…

/* Turn ON statistics */ SET STATISTICS IO ON SET STATISTICS TIME ON DECLARE @trace_filepath NVARCHAR(2048) = 'C:\TEMP\InstID0000000999_xEvent_20150923182409_0_130875206512420000*.xel'; /* load xel file into table w/ XML column */ INSERT INTO dbo.xel ( FILE_NAME ,xml_data ) SELECT [FILE_NAME] ,[XML_DATA] = CONVERT(XML, EVENT_DATA) FROM sys.fn_xe_file_target_read_file(@trace_filepath, NULL, NULL, NULL) ; /* Turn OFF statistics */ SET STATISTICS TIME OFF SET STATISTICS IO OFF

This part took only a few seconds. So it was the next step, when shredding the XML_DATA column, where all the time was being spent. So we started looking at resource usage and noticed that only a single CPU was pegged at 100% while the other was barely active. So we considered (allocating more CPU to the hyper-V client and) breaking up the work into 4 piles and doing 4 parallel shreds. We predicted this would cut our time down to ~2 hours.

Better

Around the same time, someone suggested we try an XML index.

Note: I’m not going to pretend like I understand what is happening under the covers w/ an XML index…suffice it to say I was very skeptical that this would improve performance in any significant way. I mean – we still had to scan all of the XML, right? Whatever. You can read up on XML and XML indexes on your own – I still don’t quite feel comfortable with them and I still don’t like XML shredding!

To use an XML index, we first needed to create an XML SCHEMA COLLECTION (something most of us have probably only done when preparing for a certification) and change the definition of our pre-stage table…

CREATE XML SCHEMA COLLECTION [dbo].[xEventTrace_EventData] AS N'<xs:schema attributeFormDefault="unqualified" elementFormDefault="qualified" xmlns:xs="http://www.w3.org/2001/XMLSchema"> <xs:element name="event"> <xs:complexType> <xs:sequence> <xs:element name="data" maxOccurs="unbounded" minOccurs="0"> <xs:complexType> <xs:sequence> <xs:element type="xs:string" name="value"/> <xs:element type="xs:string" name="text" minOccurs="0"/> </xs:sequence> <xs:attribute type="xs:string" name="name" use="optional"/> </xs:complexType> </xs:element> <xs:element name="action" maxOccurs="unbounded" minOccurs="0"> <xs:complexType> <xs:sequence> <xs:element type="xs:string" name="value"/> </xs:sequence> <xs:attribute type="xs:string" name="name" use="optional"/> <xs:attribute type="xs:string" name="package" use="optional"/> </xs:complexType> </xs:element> </xs:sequence> <xs:attribute type="xs:string" name="name"/> <xs:attribute type="xs:string" name="package"/> <xs:attribute type="xs:dateTime" name="timestamp"/> </xs:complexType> </xs:element> </xs:schema>' ; GO /* prestage XEL data */ IF OBJECT_ID('dbo.xel','U') IS NOT NULL BEGIN; DROP TABLE dbo.xel; END ; CREATE TABLE dbo.xel ( [Id] [int] IDENTITY(1,1) NOT NULL PRIMARY KEY ,[FILE_NAME] [nvarchar](260) NOT NULL ,[xml_data] XML(CONTENT dbo.[xEventTrace_EventData]) NOT NULL ) ; GO

Now we’re ready to load up our stage table and create an XML index on the XML_DATA column…

/* load xel files into table w/ XML column */ INSERT INTO dbo.xel ( FILE_NAME ,xml_data ) SELECT [FILE_NAME] ,[XML_DATA] = CONVERT(XML, EVENT_DATA) FROM sys.fn_xe_file_target_read_file(@trace_filepath, NULL, NULL, NULL) ; /* Create Primary XML Index */ CREATE PRIMARY XML INDEX PXML_Data ON dbo.xel (xml_data);

Then we could simply run the last step to shred the XML and load the results into a table.

Boy was I surprised to see such a performance improvement!

This method dropped the time it took to process a single 32MB file from ~5 minutes down to ~2 minutes…

- 7 seconds for loading the pre-stage table

- 10 seconds for creating the XML index

- rest of the time for shredding and loading into the final temp table

This would get us through the 3GB of trace data in 3.2 hours. And we still only saw 1 CPU being pegged which means we could bring the time down to under 1 hour.

So we’re good right? For this client, yes, that was good enough. However, a few weeks after that engagement, I stumbled upon another method that performs even better!

Best (so far)

Warning, this method involves the use of .NET – proceed with caution! That said, please proceed because the results are certainly worth it.

The same 32MB file that took 5 minutes using the first method, 2 minutes using the second method, now only took ~3 seconds using this method. Also, the processing time is essentially linear – so even when we bump it up to 4 files (or ~128MB of data) its only going to take ~12 seconds. This would have brought us down to under 5 minutes to process all 3GB of data.

Again, I’m not a .NET expert (not really even a novice) so the code I’m sharing is most likely terrible…but it works (at least for me) and its fast. So here it is…read through it and feel free to heckle me in the comments 😉

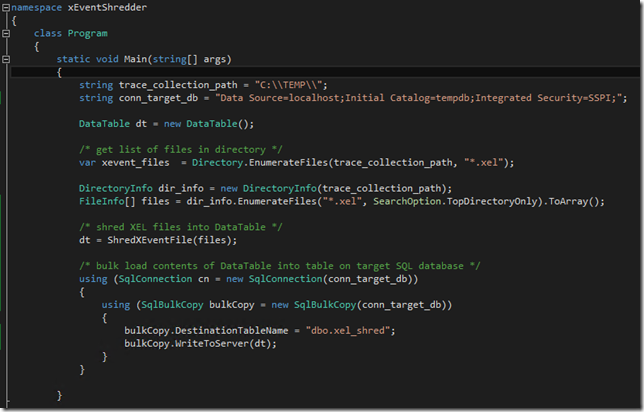

Below is a screenshot of Main giving you an idea of how the code works from the comments…

By the way, you’ll need to add references to the following libraries…

Once you’ve built the console app, you can move back to your SSMS-comfort-zone and call it like so…

/* Turn ON statistics */ SET STATISTICS IO ON SET STATISTICS TIME ON EXEC xp_cmdshell 'C:\TEMP\xEventShredder\bin\Debug\xEventShredder.exe'; /* Turn OFF statistics */ SET STATISTICS TIME OFF SET STATISTICS IO OFF

The SET-STATISTICS commands are simply there for timing the execution. And you’ll probably want to parameterize the app-code to pass in a target directory and other details so that you don’t have to edit the code and rebuild each time you want to make a change.

And that’s it. Happy Holidays!

![image[7] image[7]](https://byobi.wordpress.com/wp-content/uploads/2021/05/e3c4e-image7_thumb.png?w=232&h=244)

15 replies on “3 Methods for Shredding Analysis Services Extended Events”

thanks for the solution

LikeLike

welcome 🙂

LikeLike

Great post, Bill. A question and a suggestion:

First, how did you determine the data types for the destination table? Specifically, are the string lengths for the fields documented anywhere? My GoogleFu has not turned up anything.

Second, in your code, you create a SqlConnection object but you don’t actually use it; You are instantiating the SqlBulkCopy object with the connection string not the SqlConnection object.

LikeLike

Lance – totally sorry I missed these questions…

a) no magic – just picked (what I considered reasonable) data types and made several adjustments via trial and error. However, I noticed that w/ SSAS 2016, using the xEvents gui, there are data types listed in one of the windows…but I can’t recall if they include length (for data types like varchar).

b) good catch – just another example of how terrible my C# skills are 😉

LikeLike

[…] written about extended events for Analysis Services quite a bit (here, here, here, here, here) because I think they’re super helpful and very important in managing/monitoring an […]

LikeLike

[…] the trace, once you think the issue has occurred, and load the xEvent trace data into a table for […]

LikeLike

Hi, thanks for this great post. I have small doubt related to event parsing and extracting useful information out of it. I have started extended event with query_end, command_end & resource_usage. Now, during parsing the xel, how can I relate query_end / command_end to its corresponding resource usage. I need to do so, because resource_usage contains important information, like reads, writes etc, about executed query / command whose details have been captured with query_end / command_end.

LikeLike

Hi Vinit,

To correlate the CommandEnd/QueryEnd events with their corresponding ResourceUsage events, you can use RequestID field from the xevent trace (which for some reason I forgot to include in the example above)…

another option (if you’re using SSAS 2016) is to use the ActivityIDxfer field which is what we’re using in the following project: https://github.com/marcosqlbi/SsasEventsAnalyzer

LikeLike

I appreciated your posting. This started me down the path of developing my own powershell script that will dynamically define table definitions and bulk copy from the xel files. I posted a version 1 attempt on github. Feel free to try it out: https://github.com/mgraham-cracker/ExtendedEvents-BulkLoadParser

LikeLike

very cool – thanks for sharing… looking forward to testing it out (hopefully later this week)

LikeLike

Hi Bill, and thanks for this great post.

I am curious about that .NET solution and specifically about the “ShredExEventFile” method into which you pass the array of .xel files.

Does that function reside in the sql server.XE libraries you reference? I have searched online but I do not find any information or documentation on that method or those referenced libriaries. Do you have any links?

Thanks!

Martin

LikeLike

Hi Martin, code for the ShredExEventFile method isn’t shown in the image above… but it is included in the zip file link for download… http://byobi.com/wp-content/uploads/2015/12/xEventShredder.zip

LikeLike

Ah of course, I missed the link to the zip.

Now I see the method, thanks a bunch for sharing!

Martin

LikeLike

is there a way to get the Measures from the TEXTDATA field ?

LikeLike

unfortunately, there’s not an easy way to parse the measures from the textdata field. Assuming you had a list of all measures in the cube and the lineage (i.e. measures referencing measures), you’d still need to traverse some (potentially complex) query-scoped measures.

i like where you’re head is at!

LikeLike